Generative AI has exploded in popularity, powering innovations like ChatGPT, MidJourney, and autonomous agents. As companies race to adopt this technology, the demand for skilled Generative AI Engineers is at an all-time high.

Whether you’re preparing for your first interview or targeting top-tier companies like OpenAI, Anthropic, or Meta, this comprehensive guide of 21 real-world interview questions and answers will help you stand out.

Read Also: Ai Agent vs Agentic AI QnA

1. What is Generative AI and how does it differ from traditional AI?

Answer:

Generative AI refers to models that can create new data similar to the data they were trained on. Unlike traditional AI, which focuses on classification or prediction (e.g., identifying spam emails), generative models can produce novel outputs like images, text, music, and code.

For example:

- Traditional AI: Detects whether an image contains a cat.

- Generative AI: Creates an entirely new image of a cat.

Key differences:

- Generative AI uses architectures like GANs, VAEs, and Diffusion Models, while traditional AI often uses discriminative models.

- It requires careful control of creativity vs. realism (e.g., avoiding hallucinations in text generation).

2. Explain how transformers revolutionized Generative AI.

Answer:

Transformers, introduced in the 2017 paper “Attention Is All You Need”, solved key challenges in sequential data processing:

- They replaced RNNs and LSTMs by introducing the self-attention mechanism, allowing the model to weigh the importance of each token in a sequence.

- Transformers enabled parallelization, reducing training time drastically.

This architecture paved the way for large language models (LLMs) like GPT, BERT, and T5. Their ability to handle vast amounts of text data and generate coherent responses made them the foundation of modern generative AI.

3. What are the most common architectures used in Generative AI?

Answer:

- GANs (Generative Adversarial Networks) — Used for realistic image generation.

- VAEs (Variational Autoencoders) — Useful for learning latent representations and generating smooth data distributions.

- Diffusion Models — Powering tools like DALL·E 3 and Stable Diffusion.

- Transformers — Dominant in text generation and multimodal tasks.

- Flow-based Models — For exact likelihood estimation in data generation.

Each has strengths:

- GANs excel at high-quality image synthesis but can suffer from training instability.

- Transformers dominate natural language generation due to scalability.

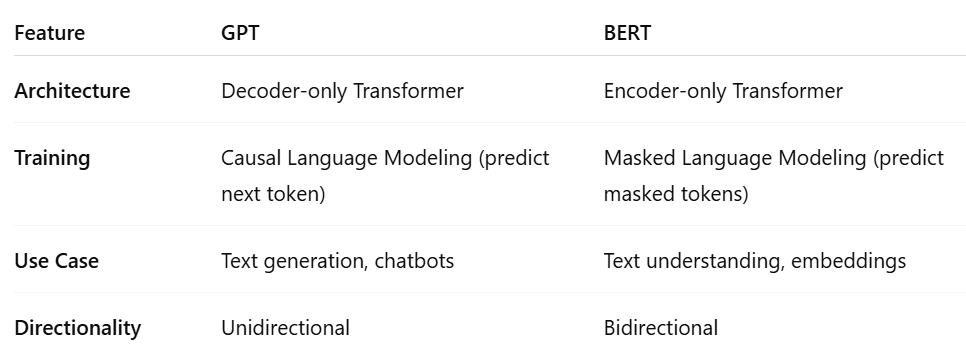

4. What is the difference between GPT and BERT?

Answer:

5. How do you prevent hallucinations in LLMs?

Answer:

Hallucinations are outputs that sound plausible but are factually incorrect. To mitigate them:

- Reinforcement Learning with Human Feedback (RLHF): Aligns outputs with human preferences.

- RAG (Retrieval-Augmented Generation): Combines LLMs with external knowledge sources to ground responses.

- Prompt engineering: Using clear, constrained prompts reduces hallucination risk.

- Fine-tuning: Training the model on domain-specific data improves accuracy.

6. Can you explain the concept of a latent space in generative models?

Answer:

Latent space is a compressed representation of data learned by models like VAEs or GANs.

- Think of it as a “map” where similar data points are located near each other.

- Sampling points from this space and decoding them allows the model to generate new but similar data.

Example: In a VAE trained on faces, moving slightly in latent space might generate a face with slightly different hair or expression.

7. What is a diffusion model and why are they popular?

Answer:

Diffusion models work by adding noise to training data and then learning to reverse this process.

- They are stochastic models that excel at generating high-fidelity images.

- Tools like Stable Diffusion and DALL·E 3 use this approach for state-of-the-art image synthesis.

Advantages:

- Produces diverse and high-resolution outputs.

- Avoids mode collapse issues common in GANs.

8. How do you fine-tune a large language model?

Answer:

There are two main approaches:

- Full fine-tuning: Adjusts all weights of the pre-trained model, requiring significant compute resources.

- Parameter-efficient fine-tuning (e.g., LoRA, adapters): Updates only a small subset of parameters.

Steps:

- Collect domain-specific data.

- Preprocess and tokenize data appropriately.

- Choose a fine-tuning method based on available compute and task requirements.

- Monitor for overfitting by evaluating on a validation set.

9. What is RLHF and why is it important?

Answer:

Reinforcement Learning with Human Feedback (RLHF) is a training paradigm used to align LLM outputs with human values and expectations.

- Step 1: Pre-train the LLM on a large dataset.

- Step 2: Fine-tune it with supervised learning.

- Step 3: Use RLHF, where human preferences shape the reward signal to guide further training.

This method is crucial in making models like ChatGPT safer and more helpful.

10. How would you implement a Retrieval-Augmented Generation (RAG) system?

Answer:

RAG architecture combines retrieval and generation:

- Retrieve: Use a vector database (e.g., Pinecone, FAISS) to fetch relevant documents.

- Augment: Append the retrieved context to the user’s query.

- Generate: Pass the augmented prompt to an LLM to generate grounded responses.

Applications:

- Customer support chatbots.

- Legal document summarization.

11. What are some ethical challenges in deploying Generative AI systems?

Answer:

Generative AI poses unique ethical risks:

- Bias amplification: Models trained on biased datasets can produce discriminatory outputs.

- Deepfakes: Misuse in creating fake videos and voices.

- Misinformation: LLMs might generate factually incorrect content confidently.

Mitigation strategies:

- Regular audits of training data.

- Human-in-the-loop systems for moderation.

- Transparency in how outputs are generated.

Companies like OpenAI and Anthropic now focus on AI alignment to address these challenges.

12. What is prompt engineering and why is it important?

Answer:

Prompt engineering is the practice of crafting effective instructions to guide LLMs toward desired outputs.

Why it matters:

- LLMs are highly sensitive to input phrasing.

- A well-crafted prompt reduces hallucinations and improves relevance.

Example:

“Summarize this text in 50 words for a 5th grader.”

“Tell me about this text.”

In production systems, few-shot prompting and chain-of-thought prompting are often used for complex tasks.

13. How do you evaluate the performance of a generative model?

Answer:

Evaluation is challenging since there is no single “correct” output. Common methods include:

- Quantitative Metrics:

- BLEU, ROUGE: Compare generated text to reference texts.

- Fréchet Inception Distance (FID): Used for image generation.

- Perplexity: Measures confidence in predicting next tokens.

- Human Evaluation:

- Judges fluency, relevance, and creativity.

- Task-Specific Tests:

- For chatbots: Use truthfulness and helpfulness benchmarks.

14. Explain how LoRA works for fine-tuning large models.

Answer:

LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning method.

- Instead of updating all weights in a large model, LoRA injects small trainable matrices into each layer.

- It reduces memory and compute requirements dramatically.

Benefits: Fine-tuning a 70B model becomes possible on a single GPU.

Applications: Domain adaptation for industries like healthcare and finance.

15. What is the role of a vector database in RAG systems?

Answer:

A vector database stores embeddings of documents for efficient similarity search.

- When a user asks a question, the system encodes it into a vector and retrieves the most relevant documents.

- Tools like Pinecone, Weaviate, and FAISS enable scalable retrieval for millions of documents.

This retrieval step ensures that LLMs work with grounded context, reducing hallucinations.

16. How would you scale a Generative AI system to serve millions of users?

Answer:

Key considerations:

- Model serving: Use optimized inference engines like TensorRT or DeepSpeed.

- Sharding: Split large models across multiple GPUs or nodes.

- Caching: Cache common queries to reduce repeated computation.

- Load balancing: Distribute traffic across servers.

- Monitoring: Track latency, throughput, and errors for reliability.

17. What is chain-of-thought reasoning in LLMs?

Answer:

Chain-of-thought (CoT) reasoning involves prompting a model to break down its reasoning into intermediate steps.

Example:

Question: “If John has 3 apples and buys 2 more, how many does he have?”

CoT Output:

- John starts with 3 apples.

- He buys 2 more.

- Total apples = 3 + 2 = 5.

Benefits: Improves accuracy for multi-step reasoning tasks.

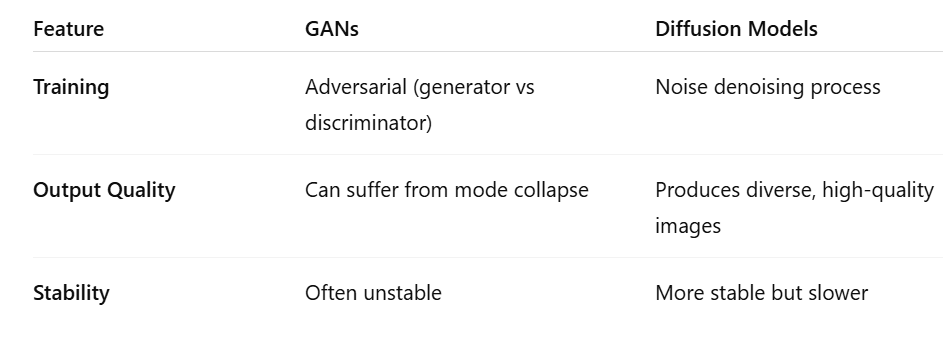

18. How do diffusion models compare to GANs?

Diffusion models are now preferred for applications like image and video generation because they are more robust and controllable.

19. How would you detect and mitigate prompt injection attacks?

Answer:

Prompt injection occurs when malicious users craft inputs to override system instructions.

Detection strategies:

- Input sanitization to strip harmful instructions.

- Restrict access to sensitive APIs and data.

- Use model sandboxing to limit potential damage.

Mitigation:

- Combine LLMs with rule-based systems for safety.

- Monitor inputs and outputs for anomalies.

20. What is a multimodal model? Can you give an example?

Answer:

Multimodal models process and generate multiple types of data (e.g., text, images, audio).

Example:

- GPT-4 Vision: Understands both text and images.

- CLIP: Connects images with textual descriptions.

Use cases:

- Visual question answering.

- Text-to-image generation (e.g., DALL·E).

21. What are some real-world applications of Generative AI in enterprises?

Answer:

- Customer Support: AI chatbots for 24/7 service.

- Marketing: Auto-generating ad copy and visuals.

- Healthcare: Assisting doctors with diagnostic suggestions.

- Finance: Fraud detection and personalized investment advice.

- Media: Content creation for blogs, videos, and podcasts.

The key to enterprise adoption is balancing creativity with accuracy, compliance, and safety.

Final Thoughts

Generative AI is reshaping industries, and companies are actively looking for engineers who understand both the theory and practical deployment challenges.

To ace your interview:

- Stay updated with cutting-edge research.

- Practice designing systems that balance scalability, ethics, and efficiency.

- Demonstrate hands-on skills by building and deploying small projects.

Written by Ritesh Sinha

👋 Hi, I’m Ritesh Sinha Generative AI Engineer | AI Content Creator | Helping people decode the future of artificial intelligence.

Ritesh Sinha