As the demand for professionals with LLM expertise grows, this article provides a comprehensive set of interview questions and answers, covering fundamental concepts, advanced techniques, and practical applications.

If you’re preparing for a job interview or simply want to expand your knowledge, this article will be useful.

Basic LLM Interview Questions

To understand LLMs, it’s important to start with the fundamental concepts. These foundational questions cover essential aspects such as architecture, key mechanisms, and typical challenges, providing a solid base for learning more advanced topics.

Table of Contents

What is the Transformer architecture, and how is it used in LLMs?

The Transformer Architecture: A Revolution in Language Modeling

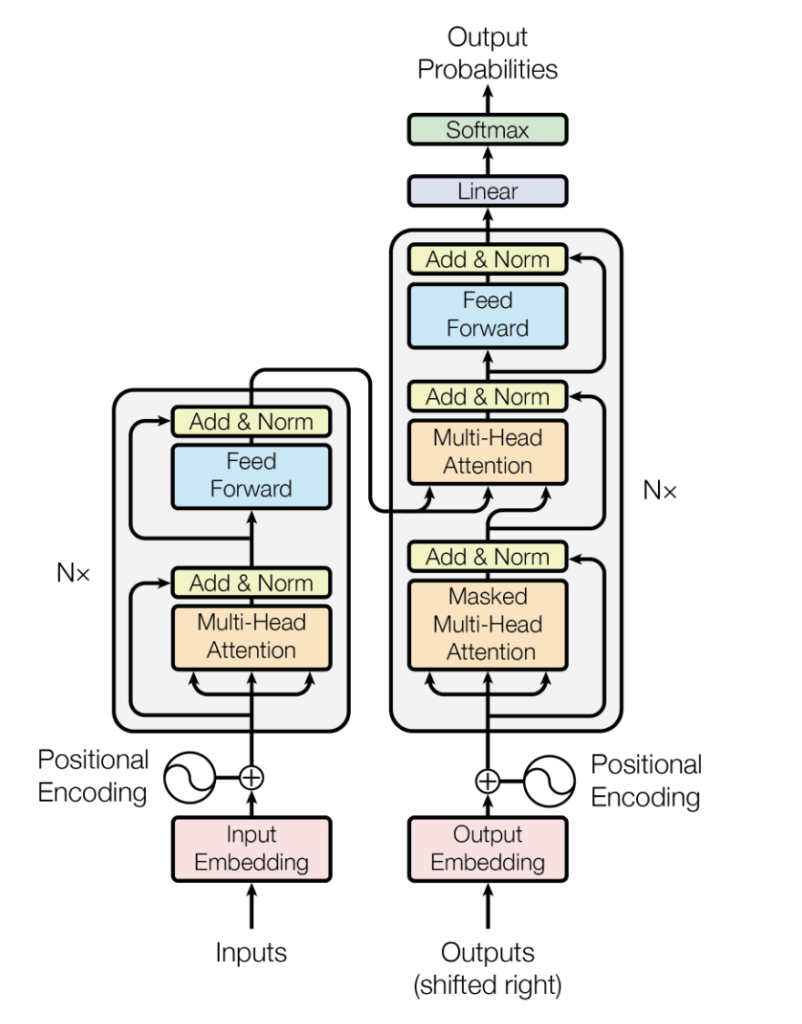

The Transformer architecture, introduced in the paper “Attention Is All You Need,” has revolutionized the field of natural language processing (NLP) and forms the backbone of many large language models (LLMs). Unlike traditional recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, which process sequences sequentially, Transformers process the entire input sequence in parallel, making them more efficient for long-range dependencies.

Key Components of the Transformer Architecture

- Encoder-Decoder Structure:

- Encoder: Processes the input sequence and generates a contextual representation.

- Decoder: Generates the output sequence, conditioned on the encoder’s output.

- Self-Attention Mechanism:

- The core of the Transformer’s power.

- Allows the model to weigh the importance of different parts of the input sequence when processing a specific position.

- This enables the model to capture long-range dependencies effectively.

- Multi-Head Attention:

- Multiple attention mechanisms operate in parallel, allowing the model to focus on different aspects of the input sequence simultaneously.

- Positional Encoding:

- Since Transformers process inputs in parallel, they need a way to incorporate positional information.

- Positional encoding adds information about the relative or absolute position of each token in the sequence.

How Transformers are Used in LLMs

Large Language Models (LLMs) like GPT-3 and BERT leverage the Transformer architecture to achieve state-of-the-art performance on a variety of NLP tasks. Here’s how:

- Pre-training on Massive Text Corpora:

- LLMs are trained on massive amounts of text data to learn patterns, grammar, and semantic relationships.

- This pre-training allows the models to acquire a deep understanding of language.

- Fine-tuning for Specific Tasks:

- Once pre-trained, LLMs can be fine-tuned on specific tasks, such as text generation, translation, question-answering, and summarization.

- This involves training the model on a smaller, task-specific dataset to adapt its knowledge to the desired application.

Advantages of Transformer-Based LLMs

- Parallel Processing: Enables efficient training and inference.

- Long-Range Dependency Capture: Captures complex relationships between words and sentences.

- High Performance: State-of-the-art results on a wide range of NLP tasks.

- Flexibility: Can be adapted to various tasks with minimal changes.

By understanding the Transformer architecture and its role in LLMs, we can appreciate the significant advancements in natural language processing and the potential for future innovations in AI.

Explain the concept of “context window” in LLMs and its significance.

The context window in LLMs refers to the range of text (in terms of tokens or words) that the model can consider at once when generating or understanding language. The significance of the context window lies in its impact on the model’s ability to generate logical and relevant responses.

A larger context window allows the model to consider more context, leading to better understanding and text generation, especially in complex or lengthy conversations. However, it also increases computational requirements, making it a balance between performance and efficiency.

What are some common pre-training objectives for LLMs, and how do they work?

Common pre-training objectives for LLMs include masked language modeling (MLM) and autoregressive language modeling. In MLM, random words in a sentence are masked, and the model is trained to predict the masked words based on the surrounding context. This helps the model understand the bidirectional context.

Autoregressive language modeling involves predicting the next word in a sequence and training the model to generate text one token at a time. Both objectives enable the model to learn language patterns and semantics from large corpora, providing a solid foundation for fine-tuning specific tasks.

- Mock Deloitte GenAI Engineer Interview (30 mins)

- Why Your ML Interview Answers Sound Right but Still Fail

- 𝗬𝗼𝘂𝗿 Machine Learning 𝗝𝗼𝘂𝗿𝗻𝗲𝘆 𝗦𝘁𝗮𝗿𝘁𝘀 𝗛𝗲𝗿𝗲 – Top 24 Points to get Success

- How to generate SQL from Informatica Jobs XML using LLM

- New Tips – How to Prepare for Generative AI Interview

What is fine-tuning in the context of LLMs, and why is it important?

Fine-tuning in the context of LLMs involves taking a pre-trained model and further training it on a smaller, task-specific dataset. This process helps the model adapt its general language understanding to the nuances of the specific application, thereby improving performance.

This is an important technique because it leverages the broad language knowledge acquired during pre-training while modifying the model to perform well on specific applications, such as sentiment analysis, text summarization, or question-answering.

What are some common challenges associated with using LLMs?

Using LLMs comes with several challenges, including:

- Computational resources: LLMs require significant computational power and memory, making training and deployment resource-intensive.

- Bias and fairness: LLMs can inadvertently learn and propagate biases present in the training data, leading to unfair or biased outputs.

- Interpretability: Understanding and explaining the decisions made by LLMs can be difficult due to their complex and opaque nature.

- Data privacy: Using large datasets for training can raise concerns about data privacy and security.

- Cost: The development, training, and deployment of LLMs can be expensive, limiting their accessibility for smaller organizations.

How do LLMs handle out-of-vocabulary (OOV) words or tokens?

Large Language Models (LLMs) handle out-of-vocabulary (OOV) words or tokens using several strategies:

1. Subword Tokenization:

- Instead of whole words, LLMs break words into smaller units called subwords. This allows the model to handle OOV words by combining known subwords.

- For example, the word “unexpectedly” might be broken down into “un”, “expect”, “ed”, and “ly”. If the model encounters an OOV word, it can still process it by breaking it into subwords and using their embeddings.

2. Special Token for OOV Words:

- A special token like

<UNK>is assigned to represent OOV words. When the model encounters an OOV word, it replaces it with this special token. - The model’s learned representation for

<UNK>can help it make reasonable guesses about the meaning of the OOV word based on the context.

3. Character-Level Tokenization:

- In some cases, LLMs can tokenize text at the character level, treating each character as a token. This approach can handle OOV words effectively, especially for languages with complex morphology or rare words.

4. Contextual Embeddings:

- LLMs use contextual embeddings, which capture the meaning of words based on their context. This helps in handling OOV words, as the model can infer the meaning of an OOV word based on the surrounding words.

5. Pre-training on Massive Datasets:

- LLMs are trained on massive datasets, which increases the likelihood of encountering a wide range of words, including rare or domain-specific terms. This helps the model learn representations for a larger vocabulary.

6. Fine-tuning on Specific Tasks:

- When fine-tuning an LLM on a specific task, it can be exposed to domain-specific vocabulary, which can further improve its ability to handle OOV words in that domain.

By combining these techniques, LLMs can effectively handle OOV words and generate coherent and relevant text, even when encountering words that were not explicitly seen during training.

What are embedding layers, and why are they important in LLMs?

Embedding layers are a significant component in LLMs used to convert categorical data, such as words, into dense vector representations. These embeddings capture semantic relationships between words by representing them in a continuous vector space where similar words exhibit stronger proximity. The importance of embedding layers in LLMs includes:

- Dimensionality reduction: They reduce the dimensionality of the input data, making it more manageable for the model to process.

- Semantic understanding: Embeddings capture nuanced semantic meanings and relationships between words, enhancing the model’s ability to understand and generate human-like text.

- Transfer learning: Pre-trained embeddings can be used across different models and tasks, providing a solid foundation of language understanding that can be fine-tuned for specific applications.

Explain the concept of attention in LLMs and how it is implemented.

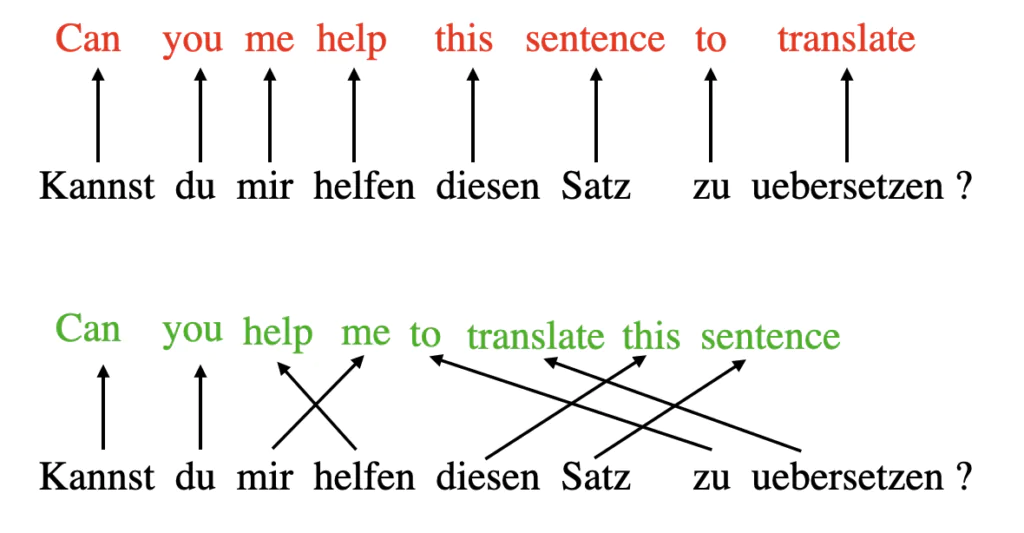

The concept of attention in LLMs is a method that allows the model to focus on different parts of the input sequence when making predictions. It dynamically assigns weights to other tokens in the input, highlighting the most relevant ones for the current task.

This is implemented using self-attention, where the model calculates attention scores for each token relative to all other tokens in the sequence, allowing it to capture dependencies regardless of their distance.

What is the role of tokenization in LLM processing?

Tokenization converts raw text into smaller units called tokens, which can be words, subwords, or characters.

The role of tokenization in LLM processing is vital as it transforms text into a format that the model can understand and process.

Effective tokenization ensures that the model can handle a diverse range of inputs, including rare words and different languages, by breaking them down into manageable pieces. This step is necessary for optimal training and inference, as it standardizes the input and helps the model learn meaningful patterns in the data.

How do you measure the performance of an LLM?

Researchers and practitioners have developed numerous evaluation metrics to gauge the performance of an LLM. Common metrics include:

- Perplexity: Measures how well the model predicts a sample, commonly used in language modeling tasks.

- Accuracy: Used for tasks like text classification to measure the proportion of correct predictions.

- F1 Score: A harmonic mean of precision and recall, used for tasks like named entity recognition.

- BLEU (Bilingual Evaluation Understudy) score: Measures the quality of machine-generated text against reference translations, commonly used in machine translation.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): A set of metrics that evaluate the overlap between generated text and reference text, often used in summarization tasks. They help quantify the model’s effectiveness and guide further improvements.

What are some techniques for controlling the output of an LLM?

Several techniques can be used to control the output of an LLM, including:

- Temperature: Adjusting this parameter during sampling controls the randomness of the output. Lower temperatures produce more deterministic outputs, while higher values return more varied results.

- Top-K sampling: Limits the sampling pool to the top K most probable tokens, reducing the likelihood of generating less relevant or nonsensical text.

- Top-P (nucleus) sampling: Chooses tokens from the smallest set whose cumulative probability exceeds a threshold P, balancing diversity and coherence.

- Prompt engineering: Crafting specific prompts to guide the model towards generating desired outputs by providing context or examples.

- Control tokens: Using special tokens to signal the model to generate text in a specific style, format, or content type.

What are some approaches to reduce the computational cost of LLMs?

To reduce the computational cost of LLMs, we can employ:

- Model pruning: Removing less important weights or neurons from the model to reduce its size and computational requirements.

- Quantization: Converting the model weights from higher precision (e.g., 32-bit floating-point) to lower precision (e.g., 8-bit integer) reduces memory usage and speeds up inference.

- Distillation: Training a smaller model (student) to mimic the behavior of a larger, pre-trained model (teacher) to achieve similar performance with fewer resources.

- Sparse attention: Using techniques like sparse transformers to limit the attention mechanism to a subset of tokens, reduces computational load.

- Efficient architectures: Developing and using efficient model architectures specifically designed to minimize computational demands while maintaining performance, such as the Reformer or Longformer.

What is the importance of model interpretability in LLMs, and how can it be achieved?

Model interpretability is essential for understanding how an LLM makes decisions, which is important for building trust, ensuring accountability, and identifying and mitigating biases. Achieving interpretability can involve different approaches, such as:

- Attention visualization: Analyzing attention weights to see which parts of the input the model focuses on.

- Saliency maps: Highlighting input features that have the greatest influence on the model’s output.

- Model-Agnostic methods: Using techniques like LIME (Local Interpretable Model-agnostic Explanations) to explain individual predictions.

- Layer-wise relevance propagation: Breaking down the model’s predictions into contributions from each layer or neuron.

How do LLMs handle long-term dependencies in text?

LLMs handle long-term dependencies in text through their architecture, particularly the self-attention mechanism, which allows them to consider all tokens in the input sequence simultaneously. This ability to attend to distant tokens helps LLMs capture relationships and dependencies over long contexts.

Additionally, advanced models like the Transformer-XL and Longformer are specifically designed to extend the context window and manage longer sequences more effectively, ensuring better handling of long-term dependencies.

Advanced LLM Interview Questions

Understanding advanced concepts in LLMs is useful for professionals who aim to push the boundaries of what these models can achieve. This section explores complex topics and common challenges faced in the field.

Explain the concept of “few-shot learning” in LLMs and its advantages.

Few-shot learning in LLMs refers to the model’s ability to learn and perform new tasks using only a few examples. This capability leverages the LLM’s extensive pre-trained knowledge, enabling it to generalize from a small number of instances.

The primary advantages of few-shot learning include reduced data requirements, as the need for large task-specific datasets is minimized, increased flexibility, allowing the model to adapt to various tasks with minimal fine-tuning, and cost efficiency, as lower data requirements and reduced training times translate to significant cost savings in data collection and computational resources.

What are the differences between autoregressive and masked language models?

Autoregressive and masked language models differ mainly in their prediction approach and task suitability. Autoregressive models, like GPT-3 and GPT-4, predict the next word in a sequence based on the preceding words, generating text one token at a time.

These models are particularly well-suited for text-generation tasks. In contrast, masked language models, such as BERT, randomly mask words in a sentence and train the model to predict these masked words based on the surrounding context. This bidirectional approach helps the model understand context from both directions, making it ideal for text classification and question-answering tasks.

How can you incorporate external knowledge into an LLM?

Incorporating external knowledge into an LLM can be achieved through several methods:

- Knowledge graph integration: Augmenting the model’s input with information from structured knowledge graphs to provide contextual information.

- Retrieval-Augmented Generation (RAG): Combines retrieval methods with generative models to fetch relevant information from external sources during text generation.

- Fine-tuning with domain-specific data: Training the model on additional datasets that contain the required knowledge to specialize it for specific tasks or domains.

- Prompt engineering: Designing prompts that guide the model to utilize external knowledge effectively during inference.

What are some challenges associated with deploying LLMs in production?

Deploying LLMs in production involves various challenges:

- Scalability: Ensuring the model can handle large volumes of requests efficiently often requires significant computational resources and optimized infrastructure.

- Latency: Minimizing the response time to provide real-time or near-real-time outputs is critical for applications like chatbots and virtual assistants.

- Monitoring and maintenance: Continuously monitoring model performance and updating it to handle evolving data and tasks requires robust monitoring systems and regular updates.

- Ethical and legal considerations: Addressing issues related to bias, privacy, and compliance with regulations is essential to avoid ethical pitfalls and legal repercussions.

- Resource management: Managing the significant computational resources required for inference ensures cost-effectiveness and involves optimizing hardware and software configurations.

How do you handle model degradation over time in deployed LLMs?

Model degradation occurs when the performance of an LLM declines over time due to changes in the underlying data distribution. Handling model degradation involves regular retraining with updated data to maintain performance. Continuous monitoring is necessary to track the model’s performance and detect signs of degradation.

Incremental learning techniques allow the model to learn from new data without forgetting previously learned information. Additionally, A/B testing compares the current model’s performance with new versions and helps identify potential improvements before full deployment.

What are some techniques to ensure the ethical use of LLMs?

To ensure the ethical use of LLMs, several techniques can be implemented:

- Bias mitigation: Applying strategies to identify and reduce biases in training data and model outputs, such as using balanced datasets and bias detection tools.

- Transparency and explainability: Developing models that provide interpretable and explainable outputs to foster trust and accountability, including using attention visualization and saliency maps.

- User Consent and privacy: Ensuring data used for training and inference complies with privacy regulations and obtaining user consent where necessary.

- Fairness audits: Conduct regular audits to evaluate the fairness and ethical implications of the model’s behavior.

- Responsible deployment: Setting guidelines and policies for responsible AI deployment, including handling harmful or inappropriate content generated by the model.

How can you ensure the security of data used with LLMs?

Securing data used with LLMs requires implementing various measures. These include using encryption techniques for data at rest and in transit to protect against unauthorized access. Strict access controls are necessary to ensure that only authorized personnel can access sensitive data.

Anonymizing data to remove personally identifiable information (PII) before using it for training or inference is also crucial. Additionally, compliance with data protection regulations like GDPR or CCPA is essential to avoid legal issues.

These measures help protect data integrity, confidentiality, and availability. This protection is critical for maintaining user trust and adhering to regulatory standards.

Can you explain how techniques like reinforcement learning from human feedback (RLHF) can be used to improve the quality and safety of LLM outputs, and what are some of the challenges associated with this approach?

RLHF is a technique that involves training an LLM to align its outputs with human preferences by incorporating feedback from human evaluators. This iterative process helps the model learn to generate responses that are not only accurate but also safe, unbiased, and helpful.

However, RLHF comes with challenges. One challenge is the potential for bias in the human feedback, as different evaluators might have varying preferences and interpretations.

Another challenge is the scalability of the feedback process, as collecting and incorporating large amounts of human feedback can be time-consuming and expensive. Additionally, ensuring that the reward model used in RLHF accurately captures the desired behaviors and values can be tricky.

Despite these challenges, RLHF has shown promising results in improving the quality and safety of LLM outputs, making it an important area of research and development in the field of prompt engineering.

More recently, an alternative to RLHF has emerged: Reinforcement Learning From AI Feedback (RLAIF).

LLM Interview Questions for Prompt Engineers

Prompt engineering is an important aspect of utilizing LLMs. It involves crafting precise and effective prompts to generate desired responses from the model. This section examines key questions that prompt engineers may encounter.

What is prompt engineering, and why is it crucial for working with LLMs?

Prompt engineering involves designing and refining prompts to guide LLMs in generating accurate and relevant outputs. It’s vital for working with LLMs because the quality of the prompt directly impacts the model’s performance.

Effective prompts can enhance the model’s ability to understand the task, generate accurate and relevant responses, and reduce the likelihood of errors.

Prompt engineering is essential for maximizing the utility of LLMs in various applications, from text generation to complex problem-solving tasks.

Can you provide examples of different prompting techniques (zero-shot, few-shot, chain-of-thought) and explain when to use them?

- Zero-shot prompting: Provides the model with a task description without any examples. Typically used when there are no available examples or when we want to test the model’s general understanding and flexibility.

- Few-shot prompting: Supplies a few examples along with the task description to guide the model. This is useful when the model needs context or examples to better understand the task.

- Chain-of-Thought prompting: Breaks down a complex task into smaller, sequential steps the model can follow. This can be beneficial for tasks that require logical reasoning and multi-step problem-solving.

How do you evaluate the effectiveness of a prompt?

Evaluating the effectiveness of a prompt involves:

- Output quality: Assessing the relevance, coherence, and accuracy of the model’s responses.

- Consistency: Checking if the model consistently produces high-quality outputs across different inputs.

- Task-specific metrics: Using task-specific evaluation metrics, such as BLEU for translation or ROUGE for summarization, to measure performance.

- Human evaluation: Involving human reviewers to provide qualitative feedback on the model’s outputs.

- A/B testing: Comparing different prompts to determine which one yields better performance.

What are some strategies for avoiding common pitfalls in prompt design (e.g., leading questions, ambiguous instructions)?

- Avoid leading questions: Ensure that prompts do not imply a specific answer, which can bias the model’s response.

- Clear and concise instructions: Provide unambiguous and straightforward instructions to reduce confusion.

- Context provision: Include relevant context to help the model understand the task without overloading it with unnecessary information.

- Iterative testing: Continuously test and refine prompts based on the model’s outputs and performance.

How do you approach iterative prompt refinement to improve LLM performance?

Iterative prompt refinement involves:

- Initial design: Start with a basic prompt based on task requirements.

- Testing and evaluation: Assess the prompt’s performance using various metrics and obtain feedback.

- Analysis: Identify weaknesses or areas for improvement in the prompt.

- Refinement: Make adjustments to the prompt to address identified issues.

- Repeat: Repeat the testing and refinement process until the desired performance is achieved.

What tools or frameworks do you use to streamline the prompt engineering process?

Several tools and frameworks can streamline the prompt engineering process:

- Interactive development environments (IDEs): Using IDEs like Jupyter Notebook for easy experimentation and visualization.

- APIs and SDKs: Leveraging APIs and SDKs provided by LLM providers (e.g., OpenAI API) for prompt testing and deployment.

- Automated testing frameworks: Implementing automated testing frameworks to evaluate prompt performance across various scenarios and inputs.

- Version control systems: Using version control systems (e.g., Git) to track changes and collaborate on prompt engineering projects.

- Visualization tools: Employing visualization tools to analyze model outputs and identify patterns or areas for improvement.

How do you handle challenges like hallucination or bias in LLM outputs through prompt engineering?

This question addresses the ethical and practical issues of LLM-generated content. A strong answer would demonstrate awareness of these problems and discuss techniques like:

- Fact verification prompts: Incorporating prompts that encourage the model to verify its information against reliable sources.

- Bias mitigation prompts: Guiding the model to consider diverse perspectives and avoid discriminatory language.

- Counterfactual prompts: Asking the model to generate alternative scenarios or perspectives to challenge its initial assumptions.

Can you explain the role of prompt templates and how they are used in prompt engineering?

Prompt templates provide a structured format for prompts, often including placeholders for specific information or instructions. They can be reused across different tasks and scenarios, improving consistency and efficiency in prompt design.

A good answer would explain how prompt templates can be used to encapsulate best practices, incorporate domain-specific knowledge, and streamline the process of generating effective prompts for various applications.

How does the choice of a tokenizer impact prompt engineering and model performance?

The tokenizer plays a crucial role in how the LLM interprets and processes the input prompt. Different tokenizers have varying vocabulary sizes and handle out-of-vocabulary (OOV) words differently. A subword tokenizer like Byte Pair Encoding (BPE) can handle OOV words by breaking them into smaller subword units, while a word-based tokenizer might treat them as unknown tokens.

The choice of tokenizer can impact model performance in several ways. For instance, a subword tokenizer might be more effective in capturing the meaning of rare or technical terms, while a word-based tokenizer might be simpler and faster for general-purpose language tasks.

In prompt engineering, the choice of tokenizer can influence how you structure your prompts. For example, if you’re using a subword tokenizer, you might need to pay more attention to how words are split into subwords to ensure that the model captures the intended meaning.

2 thoughts on “Top 20 Free LLM Interview Questions and Answers for 2025”

Comments are closed.